In a world where dashboards rule decision-making, why should you trust the opinions of 5 people?

It’s natural to reach for big numerical data to address big problems, but in some cases the numbers just don’t hold the answers. Dashboards are very good at telling you what the issue is, perhaps even which screen it’s happening on, but what they can’t tell you is why.

That’s where deep data from real people comes in. A 30-minute session with 5 people might reveal issues that thousands of data points can’t, like confusing copy, trust gaps, or misaligned expectations. But proposing a feature change based on the opinions of 5-10 people is often a hard sell, and it can get deprioritised in favour of following the numbers. So why should “small”-scale, qualitative data be prioritised just as much as big data analytics?

Numbers are easy to trust, but don’t paint the full picture

Story time. I recently did research with customer service agents on a newly launched, assessment-based feature. The feature worked well, but adoption wasn’t great. The agents reported that it took too long, so they avoided using it.

What kept tripping us up was that the numbers were telling a very different story from the users. We could see in the analytics that the assessment form didn’t take long to complete: the data reported an average of 12 minutes. That was just 2-4 minutes longer than before, in exchange for offering a much better product to the customer. The feature had been stripped down to its simplest form, and the UI was familiar to the agents. So what was the problem?

Product owners suspected it was because the form looked longer. After all, it did have more questions than before, but it was a robust assessment offering more value to the customer. Management ran extra training sessions, held emergency meetings and basically mandated the heck out of its use.

But still, the agents kept using the old version of the feature.

Research was needed, and had to be fought for (a Researcher vs Dashboard smackdown).

When we got to talk to the agents, they told us that the process took too long, not the form. Yes, we saw them move through the form rapidly (well within the 12 minutes). But we also saw the 10-minute consultation that happened before the agents even opened a screen. Before starting, the agents spent time checking whether they really needed to use the new process for that customer, and that took a long time. Time that was not measured in the dashboard data.

This was because:

- They were worried about the time-based SLA targets when completing the form.

- They worried about getting the information wrong and having their submission rejected by the back-stage professionals vetting the work.

- They were concerned that the new feature had a different incentive structure, and using it hit them in the pocket.

The moral of the story is that “Big” data was never going to get us past the adoption issues and drop-off points in this case. Only speaking to the users and understanding their needs got us to the bottom of the issue.

Trust the process, not the volume

The behaviours and insights from 5 people can feel hard to trust; after all, humans naturally gravitate toward big numbers. This hesitation is likely why stakeholders often resist investing in user research. But here’s the thing: neglecting qualitative insights carries its own risks. UX research acts as your safety net, catching critical pain points before you build, launch, or scale something that’s fundamentally flawed.

It’s important to see qualitative research as a field of study in itself, with documented and accepted methodologies. We don’t doubt the detective who builds a case from a single fingerprint or DNA sample, because they follow proper evidence-collection procedures and forensic analysis protocols. It is the same for user research. Small data is vital when it’s collected correctly.

The methodology validates the data.

The general understanding is that 5 users will expose around 80% of issues in a usability test (according to usability expert Jakob Nielsen). In fact, speaking to more people usually brings diminishing returns from the data, but a rapidly escalating bill for the client. In our experience, with thousands of usability tests over the last 7 years, we have found the same, which is why we opt to keep studies as light as possible (check out our Mini Usability package!).
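Nielsen’s figure is usually explained with a simple model from Nielsen and Landauer: if each participant independently uncovers any given problem with probability L, then n participants are expected to find a share of 1 - (1 - L)^n of the problems. Nielsen reported an average L of about 31% across the projects he studied, but real values vary between products, so treat 0.31 as an assumption. The short Python sketch below (the function name is our own, purely for illustration) shows how quickly the curve flattens:

```python
def share_of_issues_found(n_users: int, p_detect: float = 0.31) -> float:
    """Expected share of usability issues found by n_users.

    Assumes the Nielsen-Landauer model: each user independently finds
    any given issue with probability p_detect (0.31 is Nielsen's reported
    average, used here as an assumption, not a universal constant).
    """
    # Probability that every one of the n users misses a given issue
    missed_by_all = (1 - p_detect) ** n_users
    return 1 - missed_by_all


# Print the expected share for a few study sizes to show diminishing returns
for n in (1, 3, 5, 10):
    print(f"{n:>2} users: {share_of_issues_found(n):.0%}")
```

Under this assumption, five users land at roughly 84% and ten at roughly 98%, so doubling the study buys about 14 more percentage points, and each additional user after that adds less than the one before.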

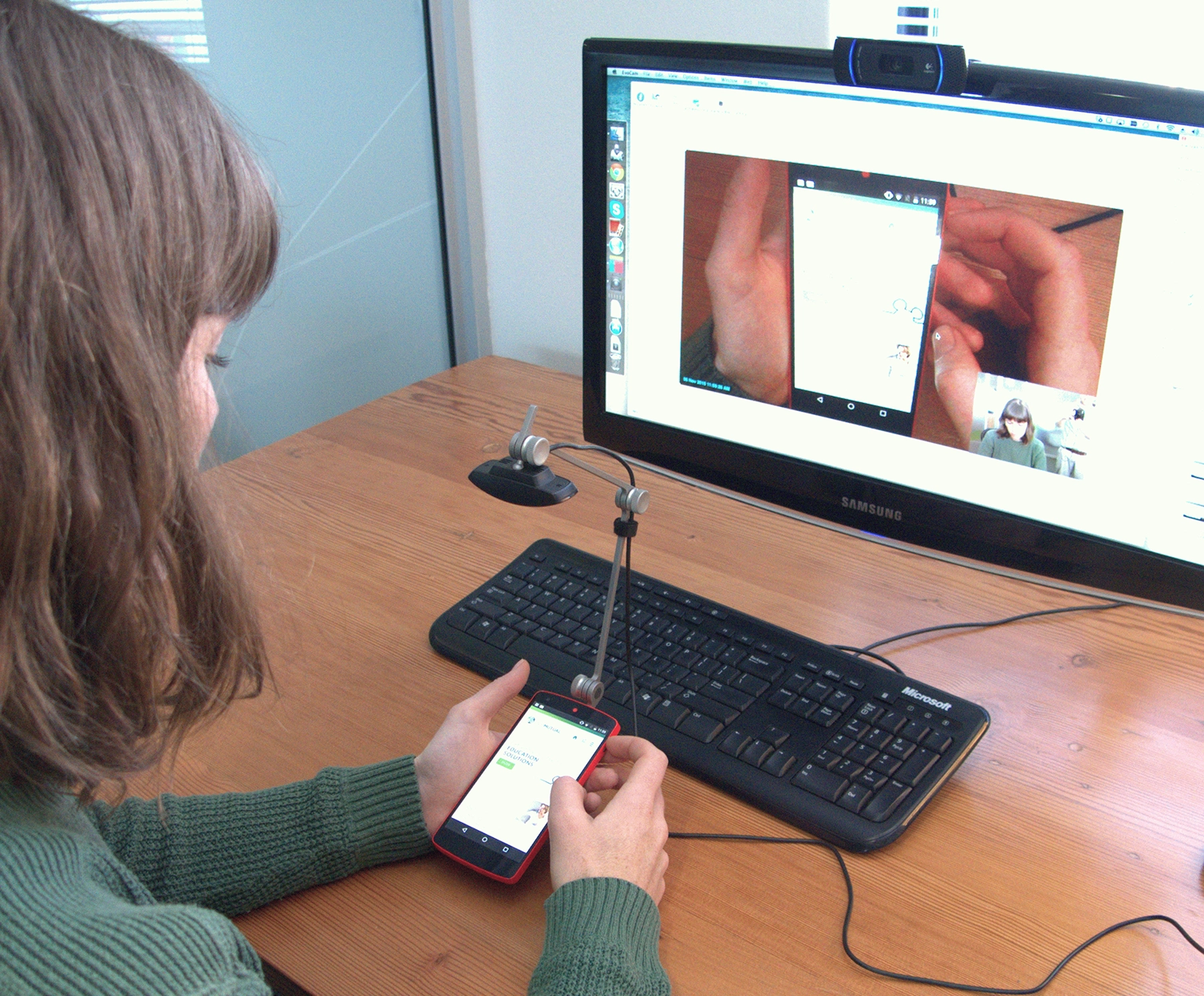

However, we have found that for in-depth interviews, where the goal is not to evaluate an experience but to learn about customer needs, a good starting number is 10-12 interviews. Demystifying the research process helps if you’re struggling with buy-in. Try inviting stakeholders into the research to see their product through the eyes of the users.

Image credit: HMW archives

Conclusion: Insight Comes in All Sizes

You don’t have to choose.

Trust quantitative data to tell you what’s happening and where. Trust qualitative data to tell you why.

When used together, they give you a full, human-centred view of what’s working and what might need to be improved.

Because sometimes, 5 users are exactly who you need to hear from.